Stop Asking How Not to Be Replaced by AI

The real question is whether you move from “producer” to “owner.”

I get a version of the same message constantly: “What should I do to not be replaced by AI?”

People expect comfort. They want a checklist that keeps their current role intact. I’m not going to offer one. The reality is that the parts of your job you’ve been rewarded for (typing code, churning tickets, filling in docs) are getting cheap fast. If you’re clinging to those as your identity, it’s going to hurt.

A lot of the anxiety comes from a quiet assumption: “My job exists because I produce output.” Code output. PRDs. Jira updates. Spreadsheets. Slides. Those are artifacts. AI is turning artifacts into commodities. And when something becomes a commodity, companies stop treating it like a career moat.

So when someone asks me how not to be replaced, what I hear is: “How do I keep being paid for the parts of my job that are becoming cheap?”

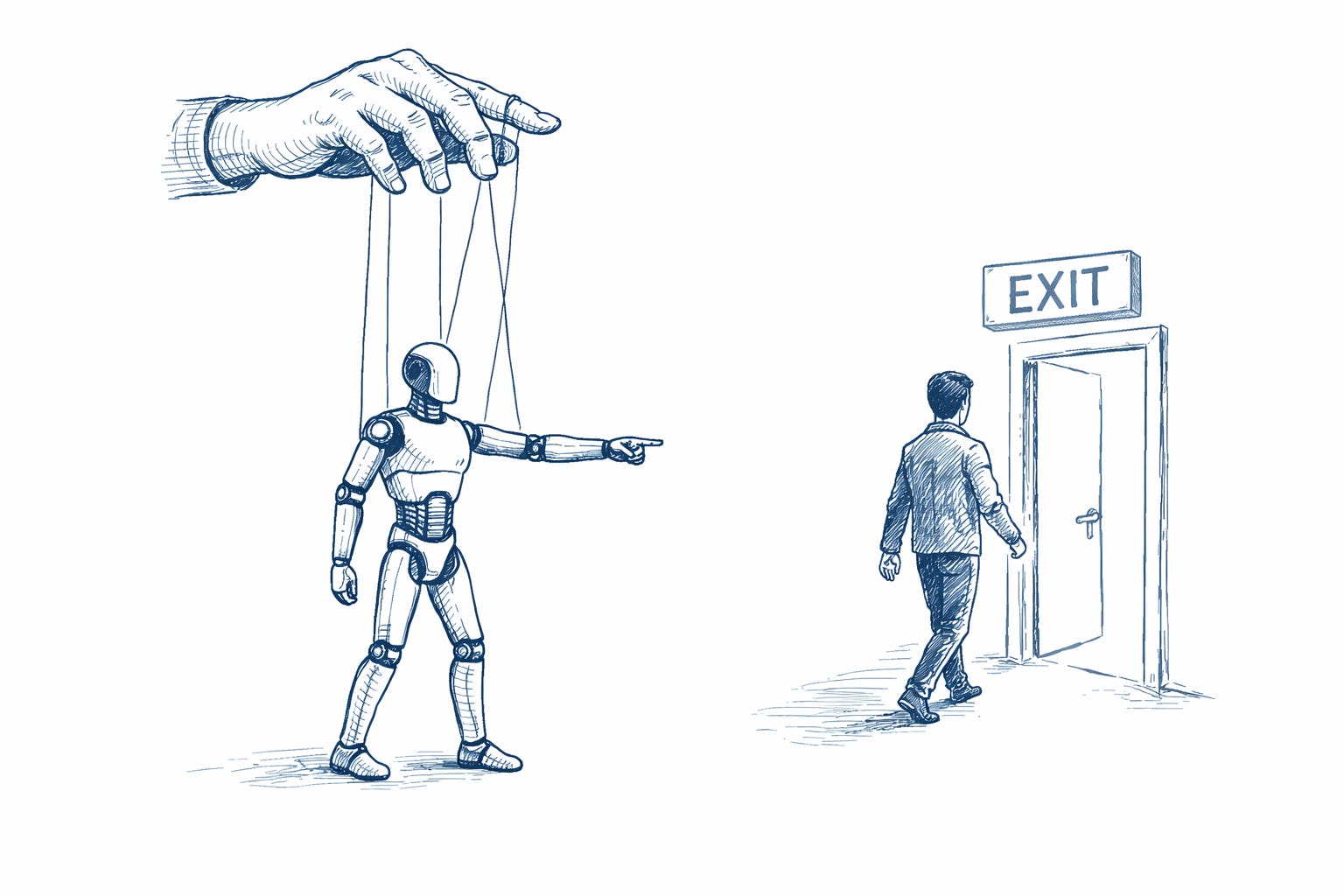

That framing is the trap.

The question worth asking is: “If the typing is almost free, what’s left that a team still needs a human to own?”

There’s a blunt answer: ownership under uncertainty. Judgment when the trade-offs are real. Clarity when everyone is spinning. And the willingness to carry the outcome when it works and when it doesn’t.

If you’re a developer who loves coding more than problem-solving, this shift is going to land hard. Coding is a clean feedback loop. You write, it compiles, tests pass, dopamine hits. AI is about to outpace you in that loop. Faster drafts. Faster refactors. Faster test scaffolds. Faster “good enough.” If your value is “I can produce code,” you’re signing up to compete with a machine that never gets tired and never needs a meeting-free morning.

If you’re a PM who hides behind frameworks and rituals, same story. If your job is mostly translating conversations into templates, running ceremonies, and producing documents, AI will do that work at a level that’s uncomfortable to watch. Not perfect, but good enough for the organization to stop paying a full-time human to be a document factory.

What doesn’t get replaced as easily is the part where someone has to decide.

Decide what problem is worth solving. Decide what “done” means. Decide what to cut when time runs out. Decide what risk is acceptable. Decide what we’ll measure in the real world. Decide how we’ll respond when reality disagrees with the plan.

Those decisions are the job. The artifacts were just the visible exhaust.

This is where I think smart people get stuck: they treat “having a job” as the goal, instead of becoming the kind of person teams depend on when the work gets messy. They look for tactics to protect their current tasks instead of building the ability to own outcomes.

And yes, that means letting go of some identity.

If you’ve built your self-worth on being the person who can crank out code, you’re going to feel threatened. If you’ve built your self-worth on being the person who can run the process, you’re going to feel threatened. That’s not because you’re weak. It’s because you attached your value to the part that’s being automated.

The switch you need is simple to say and hard to live: stop thinking of yourself as an artifact producer. Start thinking of yourself as an outcome owner.

Outcome owners do a few things consistently, regardless of title.

They frame problems in plain language that the whole team can agree on. They define success in a way you can measure after release, not just in a demo. They spot constraints early—performance, security, cost, usability, migration pain, integration ugliness—before the team commits to the wrong path. They make trade-offs explicit instead of letting them hide in the backlog. They reduce “unknowns” before the team burns weeks building the wrong thing.

And they’re calm when the plan breaks, because they planned for that.

AI can help with every one of these steps. But it can’t be the accountable owner. It can’t be the person who says, “We’re doing this, not that, and here’s why,” and then lives with the outcome.

So what should you actually do?

Not “learn AI.” Everyone will learn AI. That’s table stakes.

You train yourself to operate one level higher than the tool.

For developers, that means you don’t win by writing more code. You win by making better calls about what code should exist, what shouldn’t, and what risks you’re taking on by shipping it. You get good at reviews that catch real problems: edge cases, failure modes, operational blind spots, sloppy assumptions. You become the person who can take a vague goal and turn it into a plan that won’t collapse under real usage.

For PMs, that means you stop being a backlog curator and become a decision maker. You get sharp about what matters to users and the business, what doesn’t, and why. You can say no without hiding behind process. You can hold a line when stakeholders try to turn every idea into a “must-have.” You can create focus when there are ten competing priorities and everyone has a plausible argument.

Here’s a practical way to force the shift without turning it into a motivational poster.

Run one project with a rule: the AI does the drafting, you do the owning.

Let the tool write the first pass: the code, the tests, the PRD, the rollout note, the API doc. Your job is to produce the pieces that AI can’t fake well:

A one-paragraph problem statement that a skeptical teammate would agree is the real problem.

A definition of success that includes what you’ll measure after release.

The top failure modes and how you’ll detect them quickly.

The trade-offs you’re making and what you’re explicitly choosing not to do.

A decision log: what you decided, when, and based on what evidence.
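If the decision log feels abstract, here is a minimal sketch of one in Python. The `Decision` fields and the `undocumented` helper are illustrative names I made up, not a standard format, and the two entries are invented examples. The only point is that each decision carries a date, the evidence behind it, and what was explicitly left out.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Decision:
    """One decision-log entry (hypothetical structure, not a standard)."""
    decided_on: date                  # when the call was made
    decision: str                     # what was decided
    evidence: list[str]               # what the call was based on
    not_doing: list[str] = field(default_factory=list)  # explicit non-goals


def undocumented(log: list[Decision]) -> list[Decision]:
    """Return the entries that record no evidence at all."""
    return [d for d in log if not d.evidence]


# Invented sample entries, for illustration only.
log = [
    Decision(
        date(2025, 1, 6),
        "Ship CSV export before PDF export",
        evidence=["Support tickets ask for CSV more often than PDF"],
        not_doing=["PDF export this quarter"],
    ),
    Decision(date(2025, 1, 9), "Skip the cache layer for launch", evidence=[]),
]

for entry in undocumented(log):
    print(f"{entry.decided_on}: '{entry.decision}' has no recorded evidence")
```

A plain text file works just as well. What matters is that the evidence is written down at the time, so a decision made on a hunch is visible as one.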

When you work this way, two things happen. First, you ship faster, because the typing stops being the bottleneck. Second, your value becomes obvious in a different way: you’re the person reducing chaos and making the work coherent.

If you do this and feel a little lost, that’s normal. It means you were relying on output as your proof of worth. Most of us did, because it’s measurable and praised. Now the measuring stick is shifting.

You don’t avoid being replaced by protecting your current tasks. You avoid being replaced by becoming the person teams trust with ambiguity, trade-offs, and outcomes.

And if that doesn’t appeal to you—if what you really love is the craft of typing code or filling in docs—then be honest about it. There’s nothing wrong with loving the craft. Just don’t confuse loving the craft with having a defensible career moat in a world where the craft is being automated.

The people who do well in the next few years won’t be the ones who write the most code or produce the cleanest templates. They’ll be the ones who can take messy problems, make them tractable, and drive them to a measurable result while everyone else argues about the right tooling.

This really resonated with me! I’m hopeful that some companies in my city are starting to work this way, but honestly, it’s been difficult to tell where that’s actually happening. I’ve recently started a new job search as an experienced dev, and leveraging AI is something I’m actively looking for. We’ll need to adapt as developers, both in how we work and in how the required skill set is evolving.

I’ve only been on the job market for a little over a week, but so far, none of my conversations with recruiters have touched on any of this. I’ve been told AI isn’t allowed in technical tests and that they’ll “catch” me if I use it.

It feels like there’s still very little nuance around how AI can be used as a practical coding tool, and hiring processes haven’t really caught up with the skills you’re describing here. I’m interested in working with companies that get this and aren’t recruiting “the old way.”